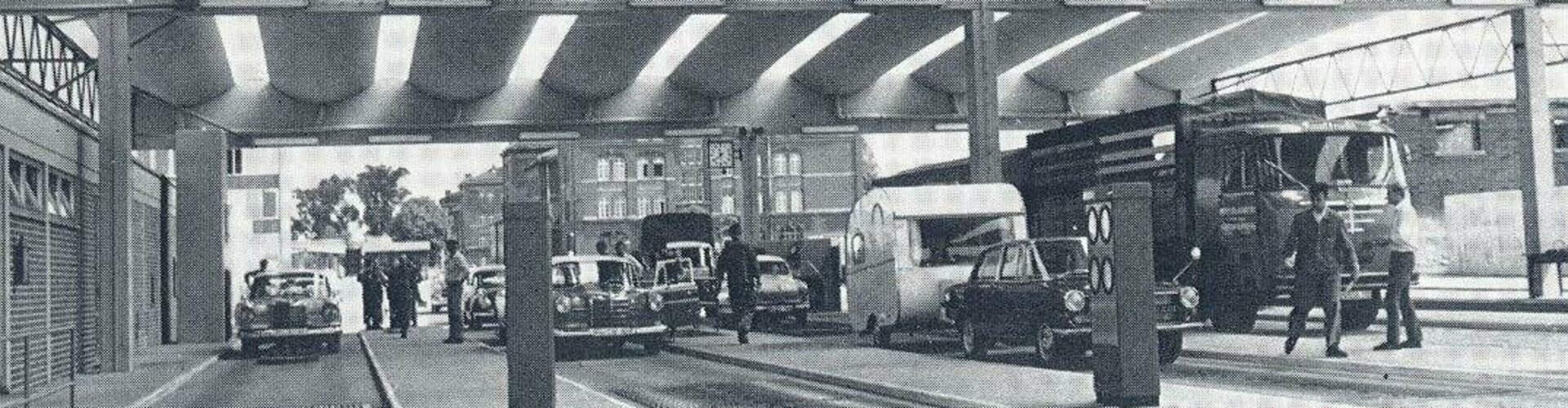

17 November 2021 - 222 vehicle models, 5 age categories, 18 particularly relevant defects and the results of 9.6 million general inspections: these are the key figures of the "TÜV-Report 2022". "With the current edition, we are celebrating 50 years of the TÜV-Report," says Dr Joachim Bühler, Managing Director of the TÜV Association in Berlin. "The TÜV-Report has been Germany's most important independent used car guide for five decades. During this time, the magazine has made automotive and technical history."

The first issue appeared in 1972. On the cover, an Opel GT dashed through a puddle, and the magazine featured DAF, NSU and Simca, car brands that have long since disappeared. In the same year, BMW presented its first 5 Series and Mercedes the S-Class. And Volkswagen? It produced its 15 millionth Beetle, a world record. The Beetle thus overtook the Ford Model T as the most-built passenger car model up to that time. Yet the end of an era was already in sight: two years later came the Golf.

"The dominant technical issue in the first decades of the TÜV-Report was rust," says Bühler. As late as the mid-1980s, almost one in three ten-year-old vehicles inspected by TÜV experts had significant rust, cracking or breakage damage. Since then, car bodies, which are usually made of steel, have been galvanised. The result: today, the rate of rust damage in this age group is less than one percent.

At that time, road safety in general was poor. "At the beginning of the 1970s, around 20,000 people were killed in road accidents in Germany every year, and that was with far fewer vehicles on the roads," says Bühler. In 1970, about 17 million motor vehicles were registered in Germany; today there are 59 million, more than three times as many. Manufacturers were not yet required to install seat belts. "In addition to the lack of safety technology, the condition of the vehicles was a real problem. Time and again, technical defects such as burst tyres or failing brakes caused dangerous situations on the road," says Bühler. "The TÜV-Report came at exactly the right time. The aim was, and still is, to highlight the weak points of vehicles and thus increase safety on our roads." Today, road traffic fatalities stand at around 3,000 a year: significantly fewer than back then, but still far too many.

Another topic covered in the first edition was environmental protection. One of its articles already stated: "Air, water and earth have reached a level of pollution that poses a serious threat to the existence of mankind." Germany introduced its first exhaust emission limits in 1970, for the highly toxic gas carbon monoxide (CO). Carbon dioxide (CO2), known today as a climate killer, was not yet on politicians' radar. It took until 1985 for the special exhaust emission test (ASU) to become mandatory. And it was not until 2010 that this test, since renamed the exhaust emission test (AU), became an integral part of the general inspection. "We are currently calling on policymakers to establish a procedure for measuring nitrogen oxides (NOx) in diesel vehicles," says Bühler. In addition, the experts need access to environmentally relevant vehicle data in order to better detect damage to, and tampering with, the exhaust system.

In the view of the TÜV Association, the general inspection must be continuously developed to keep pace with technological change. "Today, digitalisation and electrification in particular influence the safety of vehicles. The car is developing into a smartphone on wheels," says Bühler. Modern vehicles carry a growing number of digitally controlled assistance systems, which are not yet sufficiently tested. "We expect the first purely electric vehicles to appear in the next edition of the TÜV-Report," says Bühler. Only by then will enough e-cars have come through the test centres to give an objective picture of their condition.

DEFECT RATES DECREASE IN TÜV-REPORT 2022

In the current TÜV-Report, 17.9 percent of all vehicles failed the general inspection with "significant" or "dangerous defects", 2 percentage points fewer than in the previous year. The proportion of passenger cars with minor defects fell by 0.5 percentage points to 9.1 percent. Despite this positive trend, many cars on the road still pose a threat to road safety. Around 100,000 vehicles had to go straight to a workshop because of "dangerous defects" such as worn brake discs, badly worn or damaged tyres or completely failed brake lights. And around 10,000 vehicles were classified by the experts as "unsafe for traffic" and immediately taken off the road.

The overall winner of the TÜV-Report 2022 is once again the Mercedes GLC, with the Mercedes B-Class in second place and the Volkswagen T-Roc in third also making it onto the podium. In the other age categories, the Audi Q2 wins among 4- to 5-year-old cars and the Porsche 911 among 6- to 7-year-olds. Among older cars (8 to 9 and 10 to 11 years old), the Audi TT comes out on top.

Since 1998, the TÜV-Report has been published in cooperation with the editorial team of AutoBild. The current issue was published on 12 November 2021 and costs 5.40 euros.